Recently, the entertainment industry has been facing its first crisis due to "AI fabrication."

Before anything can be verified, a celebrity's image is damaged, public opinion turns overnight, and activities are suspended. Rumors existed in the past, but they never spread this quickly or this threateningly.

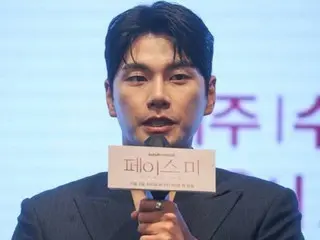

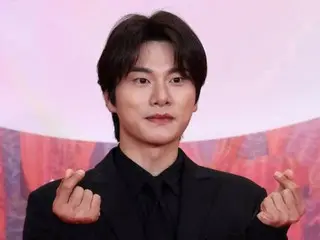

Now, composite images passed off as "evidence that looks real" are putting celebrities' livelihoods in jeopardy. Actor Lee Yi Kyung, model Moon Ga Bi, actor Lee Jung-jae, and others have fallen victim one after another.

As cases keep emerging, this is no longer a matter of one or two unlucky individuals but a risk to the entire entertainment industry.

Fabrication is fleeting, but the ripples are long-lasting... Why are AI rumors so dangerous?

AI fabrication has gone far beyond simple "rumor generation." The most prominent example is the rumor about Lee Yi Kyung's private life, which began with a social media post by a foreign woman.

The suspicions quickly spiraled out of control, with images, videos, and even conversations synthesized by AI. The woman belatedly apologized, saying, "The materials were fabricated by AI," but by then Lee Yi Kyung had already been dropped from two variety shows. The fabrication struck in an instant, but the damage carries over into his future activities.

Recently, however, the woman caused a stir by saying, "I was not sued. I'm debating whether to release a proof shot. It would be a bit weird to let it end like this. I'm disappointed that it's not AI."

The Moon Ga Bi case is similar. As soon as she posted a photo of her son taken from behind, someone used AI to generate an image of him from the front.

This is not mere image manipulation but digital stalking that violates the family's privacy, which is why Moon Ga Bi reacted so angrily.

Lee Jung-jae's case shows even more direct harm. His face was used on an AI-generated ID card in a romance scam, and the scheme produced a real victim.

His agency immediately issued a statement saying, "Fake videos in which the faces and voices of our actors have been fabricated using AI technology are circulating. This is clearly illegal," and warned that it would file criminal charges.

This case shows that AI fabrications go beyond damaging someone's image and can be misused as tools for crime.

For celebrities, their "image" is the foundation of their existence.

Damage to that image has long-term ripple effects on advertising contracts, future work offers, fan trust, and even the value of a global brand.

Agencies alone are not enough... the practical limits described by industry insiders

The entertainment industry already has systems in place centered on lawsuits and criminal complaints. However, the prevailing view is that agencies struggle to deal with today's AI technology on their own. One industry insider said, "Everyone in the industry is taking this very seriously.

"As false content becomes more sophisticated and realistic, portrait rights and reputations are being seriously damaged. We maintain a system for immediate legal action and reporting, centered on our legal team."

However, he also pointed to the "structural limitations" the industry faces: "AI is spreading so quickly that ordinary people can easily create deepfake content. It's a difficult environment. There are limits to what an agency can do alone. Only by informing fans, strengthening platform monitoring, and implementing government regulation can we effectively address the issue."

This statement means that dealing with deepfakes is a problem not just for the entertainment industry but for the entire ecosystem. In particular, the biggest risk is that platforms are not playing their part.

The channels that distribute and spread AI content have been left unchecked, and protection for entertainers has remained a matter of "after-the-fact response." The industry unanimously says the following institutional measures are urgently needed:

▲ Mandatory labeling of AI-generated content ▲ A system for immediately deleting deepfake content ▲ Stronger punishment for malicious creators and distributors ▲ A mandatory monitoring system for platform operators.

This lack of safeguards is a risk that can no longer be overlooked, as it can lead to crimes such as the Lee Jung-jae fraud case.

A new system for protecting celebrities in the age of AI must be designed.

The biggest problem facing the entertainment industry is the lack of a "future-oriented response system."

Most measures are taken only after the fact; they cannot keep pace with how quickly fabrications spread, let alone minimize the damage.

In particular, AI-based rumors pose a threat to an artist's entire brand.

The source said, "Deepfake rumors are devastating to brand value and overall activities. They have led to substantial damage, such as canceled advertising contracts and suspended activities. Fans are also suffering great mental anguish."

He also suggested concrete improvements, saying, "It is necessary to make labeling of AI content mandatory and to strengthen the penalties for those who create and distribute it. I also think it is necessary to create a joint industry response system."

"In particular, newcomers preparing to debut, artists about to enter the global market, and entertainers whose long-term careers depend on the trust in their brand may become even more vulnerable in the age of AI fabrication," he added.

What the entertainment industry needs going forward is not simply a system for filing complaints and lawsuits, but an "infrastructure for protecting celebrities" that platforms, the government, and the industry build together. The time is approaching when this can no longer be treated as an individual problem.

2025/11/19 21:03 KST

Copyrights(C) Herald wowkorea.jp 111